From cutting-edge technology exploration at the World Artificial Intelligence Conference (WAIC), to early deployment trials in specific scenarios at the World Robot Conference (WRC), to the focused examination of motion control and hardware systems at the World Humanoid Robot Conference, and most recently the technology integration trends showcased at the Yunxi Conference, humanoid robots have rapidly become a core track in global high-tech competition. Most models demonstrated so far can perform basic mobility and grasping tasks, but systemic bottlenecks persist in real-world interaction: rigid interactive experiences, weak adaptation to new scenarios, and a lack of autonomous cognitive decision-making. These limitations keep robots dependent on manual remote control and hinder their critical transition from "demonstration prototypes" to "practical products."

At their root, these industry bottlenecks come down to a "broken data loop" and "deficient modality dimensions" at the perception layer. Mainstream robot solutions still widely rely on a single sensor type to build their environmental perception systems, which therefore lack multi-dimensional capabilities such as depth information and auditory interaction and struggle to form continuous, complete multimodal data streams. Incomplete perception in turn triggers a chain of problems: greater ambiguity in semantic understanding, lower precision in action generation, and reduced reliability in environmental interaction. As a result, robot systems remain highly dependent on external remote control commands and cannot achieve truly autonomous decision-making and closed-loop control in open environments.

To give humanoid robots human-like capabilities ("autonomous environmental exploration, dynamic semantic understanding, precise human-robot collaboration, and delicate task execution"), fundamental breakthroughs are needed in two key technologies: "multimodal perception fusion" and "cognitive-decision linkage." The OmniHead humanoid robot head module serves as the core hardware carrier that integrates the "Perception–Cognition–Decision–Execution" architecture, providing critical support for building this capability system.

OmniHead: The Core Architecture of Humanoid Robot Multimodal Perception

At this critical juncture where global humanoid robots are transitioning from functional demonstrations to practical application, OmniHead emerges as the first integrated head module specifically designed for humanoids. With "multimodal fusion perception" and a "hardware-software integrated architecture" at its core, it systematically reshapes the robot's environmental cognition logic and interaction paradigms. Its fundamental value lies in closing the full-chain loop of "Perception–Cognition–Decision–Execution": through the deep integration of visual, auditory, and AI reasoning capabilities, it addresses the structural shortcomings in current perception layers—such as incomplete data dimensions, insufficient temporal synchronization accuracy, and limited depth of semantic understanding—thereby advancing robots from "passive task executors" to "active environmental interactors."

Traditional robotic perception systems often rely on single or loosely coupled sensors, making them prone to fragmented perception, modality disconnection, and incomplete environmental modeling. In contrast, OmniHead, through deep hardware integration and synergistic algorithmic fusion, constructs a human-like cognitive foundation capable of "full-dimensional perception, synchronous understanding, and intent inference." It not only resolves common industry challenges—such as lack of depth perception, asynchrony between auditory and visual signals, and semantic understanding ambiguities—but also provides underlying data support for building high-quality robotic behavior databases and training large language models.

I. Technical Architecture & Core Breakthrough: Multimodal Fusion Drives Perception Dimensionality Elevation

The system capabilities of OmniHead are built upon three technical pillars, addressing the three core requirements of "Perceptual Integrity," "Temporal Consistency," and "Cognitive Comprehensibility":

1. Full-Dimensional Perception System: Building 3D Real-Scene Understanding and Sound Field Interaction Capabilities

High-Precision RGB-D Vision System: Equipped with multiple 1920×1080 global shutter cameras and infrared depth modules, it achieves sub-centimeter depth perception synchronized with high-resolution color imaging. Its point cloud density and stability significantly outperform existing solutions, efficiently supporting 3D obstacle detection, fine-grained object recognition, and scene semantic reconstruction.
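As an illustration of how aligned depth images become point clouds, the following minimal sketch back-projects a depth image into 3D points with the standard pinhole camera model. It is not OmniHead's implementation: the function name `depth_to_points` and the intrinsics `fx`, `fy`, `cx`, `cy` are assumptions chosen for this example, and the values are made up.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_points(depth_m: List[List[float]],
                    fx: float, fy: float,
                    cx: float, cy: float) -> List[Point]:
    """Back-project a depth image (metres) into camera-frame 3D points.

    Assumes a pinhole camera; fx, fy are focal lengths in pixels and
    (cx, cy) is the principal point. All values here are hypothetical.
    """
    points: List[Point] = []
    for v, row in enumerate(depth_m):        # v: pixel row
        for u, z in enumerate(row):          # u: pixel column
            if z <= 0.0:                     # non-positive depth = invalid pixel
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny 2x2 depth image with one invalid pixel; intrinsics are made up.
cloud = depth_to_points([[1.0, 0.0], [2.0, 2.0]],
                        fx=2.0, fy=2.0, cx=0.5, cy=0.5)
print(cloud)
```

Each valid pixel yields one point, so point cloud density follows directly from the depth resolution and the share of valid depth readings.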

Panoramic Surround Coverage: Multi-camera collaboration enables a 360° horizontal and 90° vertical field of view without blind spots. Combined with visual SLAM technology, it achieves real-time localization and navigation in dynamic, unstructured environments (e.g., living rooms, industrial workshops), ensuring spatial integrity for mobility decisions.

High-Robustness Auditory System: The integrated 6-microphone circular array features beamforming, sound source localization, de-reverberation, and noise suppression. It enables high-precision voice capture within 5 meters and multi-speaker separation, maintaining over 95% speech recognition accuracy even in noisy environments (e.g., malls, offices), truly achieving "clear hearing and accurate identification."
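The article does not describe how the array localizes sound, so the sketch below only illustrates the general idea with a simplified far-field model: predict the arrival delay at each microphone of a circular array for every candidate azimuth, then pick the azimuth whose predicted delays best match the measured ones. The array radius, the helper names, and the grid search itself are assumptions for this example, not OmniHead's algorithm.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value at room temperature


def mic_positions(n_mics=6, radius_m=0.05):
    """Evenly spaced microphones on a circle (the radius is an assumption)."""
    return [(radius_m * math.cos(2 * math.pi * k / n_mics),
             radius_m * math.sin(2 * math.pi * k / n_mics))
            for k in range(n_mics)]


def arrival_delays(azimuth_deg, mics):
    """Far-field arrival time offsets (s) for a source at the given azimuth.

    Microphones closer to the source hear the wavefront earlier, hence
    the negative sign on the projection.
    """
    a = math.radians(azimuth_deg)
    ux, uy = math.cos(a), math.sin(a)
    return [-(x * ux + y * uy) / SPEED_OF_SOUND for x, y in mics]


def estimate_azimuth(measured, mics, step_deg=1):
    """Grid-search the azimuth whose modelled delays best fit the measurement."""
    best_deg, best_err = 0, float("inf")
    for deg in range(0, 360, step_deg):
        model = arrival_delays(deg, mics)
        # Compare delays relative to microphone 0 so that the unknown
        # emission time cancels out.
        err = sum(((m - measured[0]) - (p - model[0])) ** 2
                  for m, p in zip(measured, model))
        if err < best_err:
            best_deg, best_err = deg, err
    return best_deg


mics = mic_positions()
# Simulated measurement: true source at 130 degrees plus an unknown offset.
measured = [d + 0.01 for d in arrival_delays(130, mics)]
print(estimate_azimuth(measured, mics))
```

A real pipeline would first estimate the inter-microphone delays from raw audio (for example by cross-correlation) and would have to cope with noise and reverberation, which this sketch ignores.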

2. Cross-Modal Synchronization Mechanism: Achieving Millisecond-Level Spatiotemporal Alignment

Using hardware-level timestamp synchronization, OmniHead achieves millisecond-level alignment of visual, auditory, and inertial data. This fundamentally resolves the "perception-action" coordination problems caused by cross-modal signal delays. In typical scenarios, the system can localize a target in real time by combining the sound source direction with visual detections ("see what is heard"), or assess path feasibility in occluded scenes using fused audio-visual signals and output semantic prompts (e.g., "Obstacle detected ahead, suggest bypassing on the right").
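Once every sample carries a timestamp from a shared clock, aligning modalities reduces to matching samples by time. The sketch below shows one simple way to do this under stated assumptions: timestamps are integers in microseconds, each stream is sorted, and a camera frame is kept only if both an audio block and an inertial sample fall within a tolerance. The function names and the 2 ms tolerance are hypothetical and not taken from OmniHead.

```python
from bisect import bisect_left


def nearest_index(timestamps, t):
    """Index of the entry closest to t in a sorted, non-empty list."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Choose the closer of the two neighbours around the insertion point.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def align(frame_ts, audio_ts, imu_ts, tolerance_us=2000):
    """Pair each frame with the nearest audio and IMU timestamps.

    Frames lacking a partner within the tolerance in either stream
    are dropped rather than paired with stale data.
    """
    aligned = []
    for t in frame_ts:
        a = audio_ts[nearest_index(audio_ts, t)]
        m = imu_ts[nearest_index(imu_ts, t)]
        if abs(a - t) <= tolerance_us and abs(m - t) <= tolerance_us:
            aligned.append((t, a, m))
    return aligned


# Made-up timestamps in microseconds on a shared clock.
frames = [0, 33_333, 66_666]
audio = [100, 10_100, 33_000, 50_000]
imu = list(range(0, 70_000, 5_000))
print(align(frames, audio, imu))
```

In this example the third frame is dropped because the closest audio block is more than 16 ms away, which is the kind of gap that would otherwise cause a mismatch between what is seen and what is heard.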

3. Cognitive Decision Empowerment: From Perceptual Data to Semantic Understanding

The built-in large AI model performs joint semantic parsing of multimodal inputs, possessing capabilities for scene attribute recognition, human behavior intent understanding, and task context reasoning. For instance, in elderly care scenarios, the system can simultaneously parse "an elderly person's rising action" and "a call for help," accurately and proactively determining the need for assistance and triggering aiding actions, achieving the cognitive leap from "environmental signal capture" to "interaction intent understanding."

II. System Integration & Development Support: Balancing High-Performance Hardware and Open Architecture

To accelerate technology adoption and ecosystem co-development, OmniHead optimizes both hardware reliability and developer-friendliness, lowering the barrier to industry application:

High-Reliability Hardware Design: The vision module employs global shutter and wide dynamic range (WDR) technologies to adapt to extreme lighting conditions (low light, strong light). The entire unit features vibration resistance and electromagnetic interference (EMI) immunity, meeting deployment requirements across various scenarios like industrial manufacturing, commercial services, and home companionship.

Open Development Ecosystem: OmniHead provides a complete SDK and APIs, supporting multi-level data outputs including RGB-D raw data, point clouds, sound source azimuth, object detection bounding boxes, and semantic segmentation results. Developers can directly use perception results to build custom business logic (e.g., industrial inspection rules, home service workflows), train scenario-specific models, or interface with third-party motion control platforms, significantly shortening R&D cycles and reducing integration costs.
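To make the idea of building business logic on top of perception results concrete, here is a minimal sketch of a "see what is heard" rule that picks the detected person whose bearing best matches the sound source azimuth. The article does not publish the SDK's interfaces, so every name below (`Detection`, `PerceptionFrame`, `find_speaker`, and their fields) is hypothetical and stands in for whatever the real SDK exposes.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Detection:
    """Hypothetical detection record; not the actual SDK type."""
    label: str
    bearing_deg: float   # direction of the object relative to the head
    distance_m: float


@dataclass
class PerceptionFrame:
    """Hypothetical bundle of time-aligned perception outputs."""
    detections: List[Detection]
    sound_azimuth_deg: Optional[float]  # None when no sound source is active


def angular_gap(a_deg: float, b_deg: float) -> float:
    """Smallest absolute angle between two bearings, with wrap-around."""
    d = abs(a_deg - b_deg) % 360.0
    return min(d, 360.0 - d)


def find_speaker(frame: PerceptionFrame,
                 max_gap_deg: float = 20.0) -> Optional[Detection]:
    """Return the detected person closest in bearing to the sound source."""
    if frame.sound_azimuth_deg is None:
        return None
    people = [d for d in frame.detections if d.label == "person"]
    if not people:
        return None
    best = min(people,
               key=lambda d: angular_gap(d.bearing_deg, frame.sound_azimuth_deg))
    gap = angular_gap(best.bearing_deg, frame.sound_azimuth_deg)
    return best if gap <= max_gap_deg else None


frame = PerceptionFrame(
    detections=[Detection("person", 350.0, 2.1),
                Detection("person", 90.0, 3.4),
                Detection("chair", 10.0, 1.2)],
    sound_azimuth_deg=5.0,
)
speaker = find_speaker(frame)
print(speaker)
```

The wrap-around handling matters here: the person at 350° is only 15° away from a sound at 5°, so it is chosen over the person at 90°, while the chair is ignored because it is not a person.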

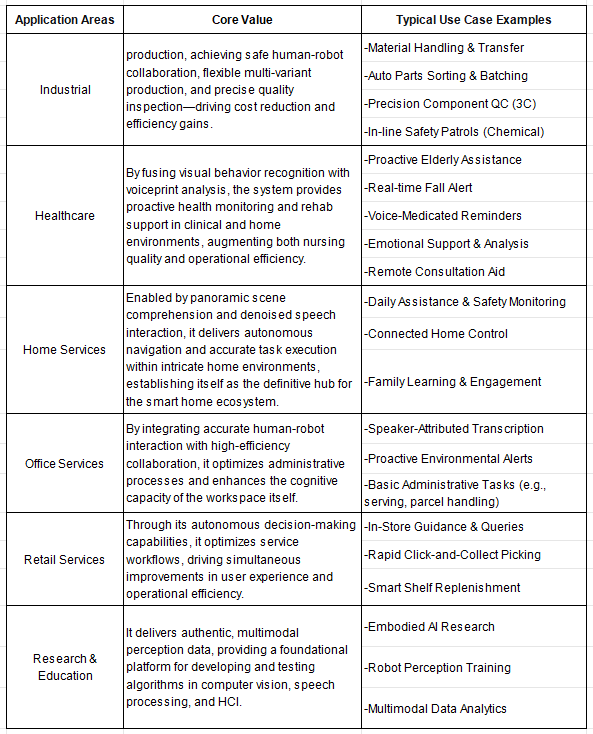

Diverse Application Scenarios: Empowering Embodied AI Innovation Across Industries

OmniHead provides robots not just with the sensory organs to perceive the world, but with a cognitive engine for understanding and decision-making through multimodal fusion. It empowers Embodied AI across six cutting-edge fields, translating potential into tangible value.

From Industry to Commercial: OmniHead Ushers in a "New Era of Interaction" for Humanoid Robots

As a wholly-owned subsidiary of LANXIN ROBOTICS, VMR Technology draws on its years of expertise in mobile robotics to adapt industrial-grade perception capabilities for the humanoid robot sector with the OmniHead head module. Built around multimodal fusion perception and supported by an open system, the module addresses critical shortcomings in current humanoid robots, namely incomplete perceptual dimensions and shallow cognitive decision-making. It also establishes an extensible, highly compatible perceptual foundation, paving the way for the large-scale deployment of embodied intelligence.

Looking ahead, OmniHead will continue to evolve, guided by the principles of "High Performance, High Availability, and High Openness." It aims to support research institutions and enterprises in building smarter, more human-like, and more integrated robotic systems, genuinely advancing humanoid robots from technical demonstration prototypes to practical, everyday use, so that they ultimately become indispensable collaborative partners in human work and life.

粤公网安备 44010602003952号